Written by: Amur Pal

Title:- High-Throughput Millimeter-Scale Pin Defect Detection: A Case for Semantic Segmentation

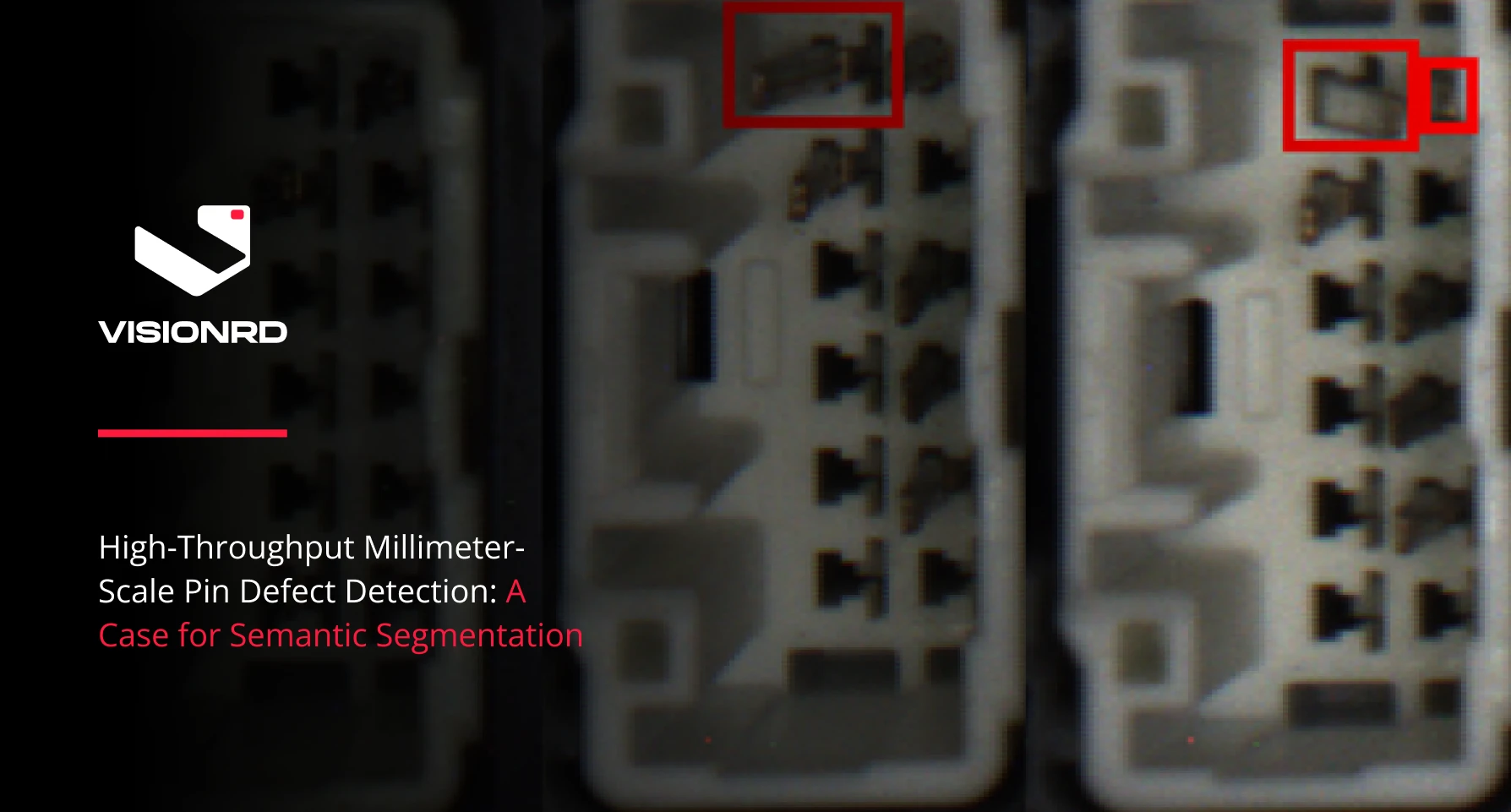

Defect detection is a crucial part of automating inspection tasks in production lines. At VisionRD, we develop AI-powered inspection systems that can detect defects in a range of automotive parts. However, the key part of inspection is to ensure that the system not only works in real-time but also makes highly accurate predictions, ideally surpassing human vision.

In this post, I will walk you through the development and deployment of one of such systems, where we solved the problem of detecting pin defects at a millimeter scale. Moreover, we’ll discuss the different approaches we tried, and how we optimized the solution for real-time deployment.

Common Pin Defects

The use case is a car’s fuse box with over 60 fuses connected to different components, and usually 3 pin terminals all with different shapes and sizes. The fuse box below shows 3 of them highlighted in red, each of which has a different number and shape of pins.

Pin terminals in the Fuse Box

Each of these terminals may have defects like bent or missing pins, which at such a small scale are incredibly difficult and time-consuming to identify for human operators. Let’s take a look at some of the pin terminals individually and see what kind of defects we can expect.

Defective Pins

Approach 1: Structural Similarity Index

The first approach to solving the pin defect problem is to apply traditional computer vision techniques on the terminals.

Assuming that we have the terminals isolated, we can take a set of reference terminals which are not defective. These references serve as the ground truth against which all incoming pins will be tested. The testing method we opted for is the Structural Similarity Index Measure, which gives the similarity index between two images. It is formulated as follows,

Source: Wikipedia

where

Source: Wikipedia

This measure is implemented in the Scikit-Image library and works as follows.

Source: Skimage

However, for our problem, we discovered that converting the terminals from BGR to HSV first gives the most robust similarity scores across the channels.

HSV terminals with 02 Bends

We proceed by separating each row of two adjacent pins and comparing them with the ground truth using SSIM, we get similarity scores of 0.235, 0.314, and 0.599 across the HSV channels, respectively.

Defective

Ground truth

However, the problem with this approach is that it does not generalize across different terminals very well. Given that the lighting conditions are almost exactly the same between the bent rows and the ground truth, the brightness channel almost always gives too high of a similarity index which makes the results meaningless.

Testing over three different pin terminals, an accuracy of 40.3% is obtained, which is obviously substandard. We experimented with single-channel grayscale images as well, but to little avail, as the problem proved too difficult for traditional computer vision methods to be sufficient.

Approach 2: Detector + Classifier

The next attempt at identifying pin defects consisted of using a state-of-the-art object detection model to get the crops of each individual pin present in the terminals and using a classifier to predict whether or not it was defective.

Good Pins

Not Good Pins

This is called a 2-step approach, and while the classification task was fairly simple to achieve with a ResNet18 or MobileNet, it was impossible to train an effective detector at such a small scale. Thus we abandoned this approach too, or perhaps it abandoned us.

Approach 3: Semantic Segmentation

Semantic segmentation with a powerful model like DeepLabV3 or U-Net was the last resort, and it worked.

Dataset

A dataset of ~100 images, 30 for each terminal labelled with “Good” and “Not Good” masks is used. Although It is crucial to ensure that the lighting conditions and camera focus are pitch-perfect, the dataset overall should represent everything the model should expect to see in production. For this purpose, we use Albumentations to add random translation, rotation, and scaling to the training data.

Training

The model is trained with the following hyperparameters.

The visualization below shows the learning of the model over the epochs. It shows how features are learned and the two Good and Not Good classes are separated as the model gets into more complex and deeper feature spaces.

Results

We stop training when the prediction masks show a clear and robust distinction between the good and not-good classes in the validation set. Next, we test the model and look at the generated masks.

All Not Good Good

Furthermore, we can get the number of pins by drawing the contours of the masks on the image itself and counting the number of good and bad contours found.

Optimization

Next, we use TensorRT to optimize the model for NVIDIA GeForce 1660 and NVIDIA Jetson Orin Nano and experiment with FP16 and INT8 implementations of the model. Other optimizations such as constant folding, layer fusion, precision reduction, quantization, pruning, graph optimization, etc. are also applied to get the quickest possible execution time with a tiny drop in accuracy.

We get a model latency of 20 milliseconds using TensorRT with a 2x model size reduction and 7x inference speedup.

Conclusion

In conclusion, VisionRD's journey to develop an AI-powered defect detection system for millimetre-scale pin terminals in automotive fuse boxes involved experimentation with various approaches. Traditional computer vision techniques and object detection models fell short of achieving the necessary accuracy. However, the adoption of semantic segmentation, combined with careful dataset curation and hardware optimization, led to a successful solution. This system now offers high-precision defect detection, surpassing human capabilities, and meets the demands of real-time deployment in automotive production lines.