Written by: Amur Pal

Title:- Advanced Driving Assistance System (ADAS) Optimized for Pakistani Roads

Introduction

The World Health Organization (WHO) reported in 2020 that Pakistan ranked 95th among countries with the most road traffic accidents and deaths. Improving city infrastructure and urban roads is only one part of the solution; the other is safer, smarter, and better vehicles. According to the National Safety Council (NSC), ADAS features such as automatic braking, cruise control, lane-keeping assist, and speed limit alerts are crucial safety measures that could prevent up to 20,481 deaths every year, or 62% of total road traffic deaths.

With Vision Drive, we propose an Advanced Driving Assistance System (ADAS) that aims not only to reduce the number and severity of critical road accidents, but also to work exceptionally well on Pakistani roads in particular. In this article, we explore the technical details of our AI models, the data we use, how we train the models, and the features Vision Drive has to offer.

What is ADAS?

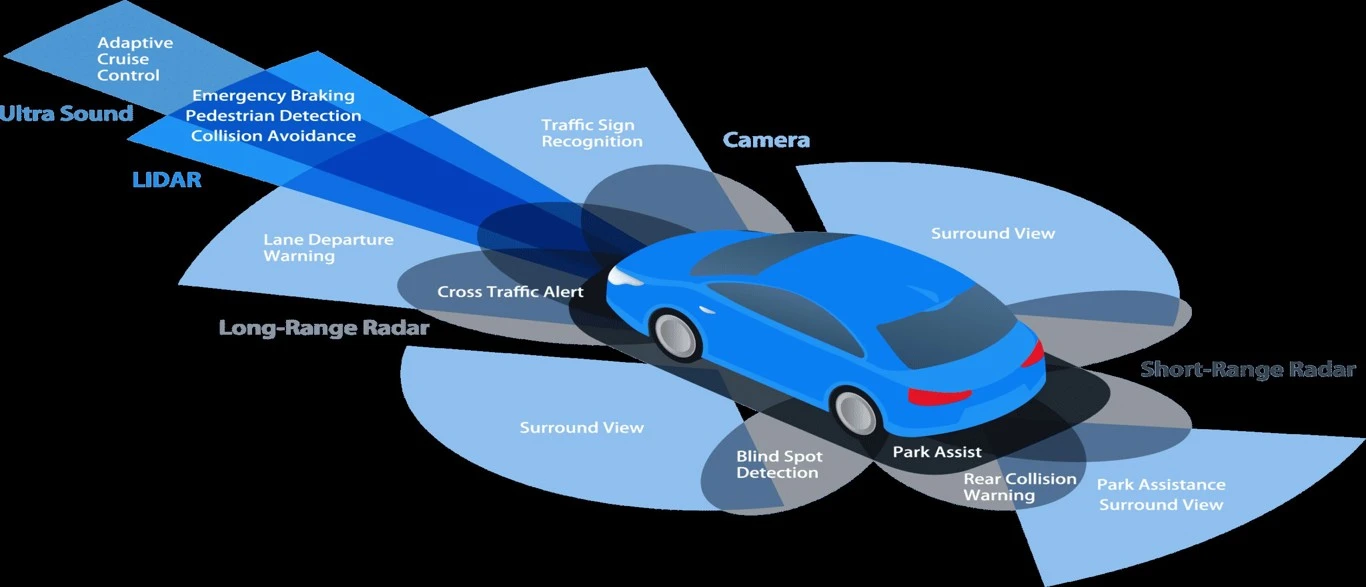

Advanced Driving Assistance System (ADAS) refers to a set of innovative technologies integrated into vehicles to enhance their safety and performance on the road. These systems are designed to provide drivers with improved situational awareness, assist in decision-making, and help mitigate potential risks and accidents. Unlike fully autonomous vehicles, ADAS is aimed at assisting drivers rather than replacing them, promoting a safer and more comfortable driving experience.

ADAS encompasses a wide range of features that utilize sensors, cameras, radar, and other technologies to monitor the vehicle's surroundings and gather real-time data. These data sources enable the system to detect potential hazards, recognize road signs, pedestrians, and other vehicles, and respond accordingly. The core objective of ADAS is to reduce the likelihood of collisions, minimize the severity of accidents, and ultimately save lives. By providing automated responses such as automatic braking, lane departure warnings, adaptive cruise control, and more, ADAS empowers drivers to make safer choices while driving.

Image credit: https://caradas.com/adas-calibration-center-salt-lake-city/

As technology continues to advance, ADAS capabilities become increasingly sophisticated. These systems not only enhance safety but also contribute to improved driving comfort and efficiency. From assisting with parking maneuvers to optimizing fuel consumption through predictive acceleration and deceleration, ADAS is transforming the driving experience. In the context of Pakistani roads, where unique challenges and road conditions exist, a tailored ADAS solution like Vision Drive can play a pivotal role in addressing the nation's road safety concerns and improving overall traffic management.

Vision Drive

Let’s go through the functionality of our ADAS product in detail and take a look at how everything works under the hood!

- Building a Dataset

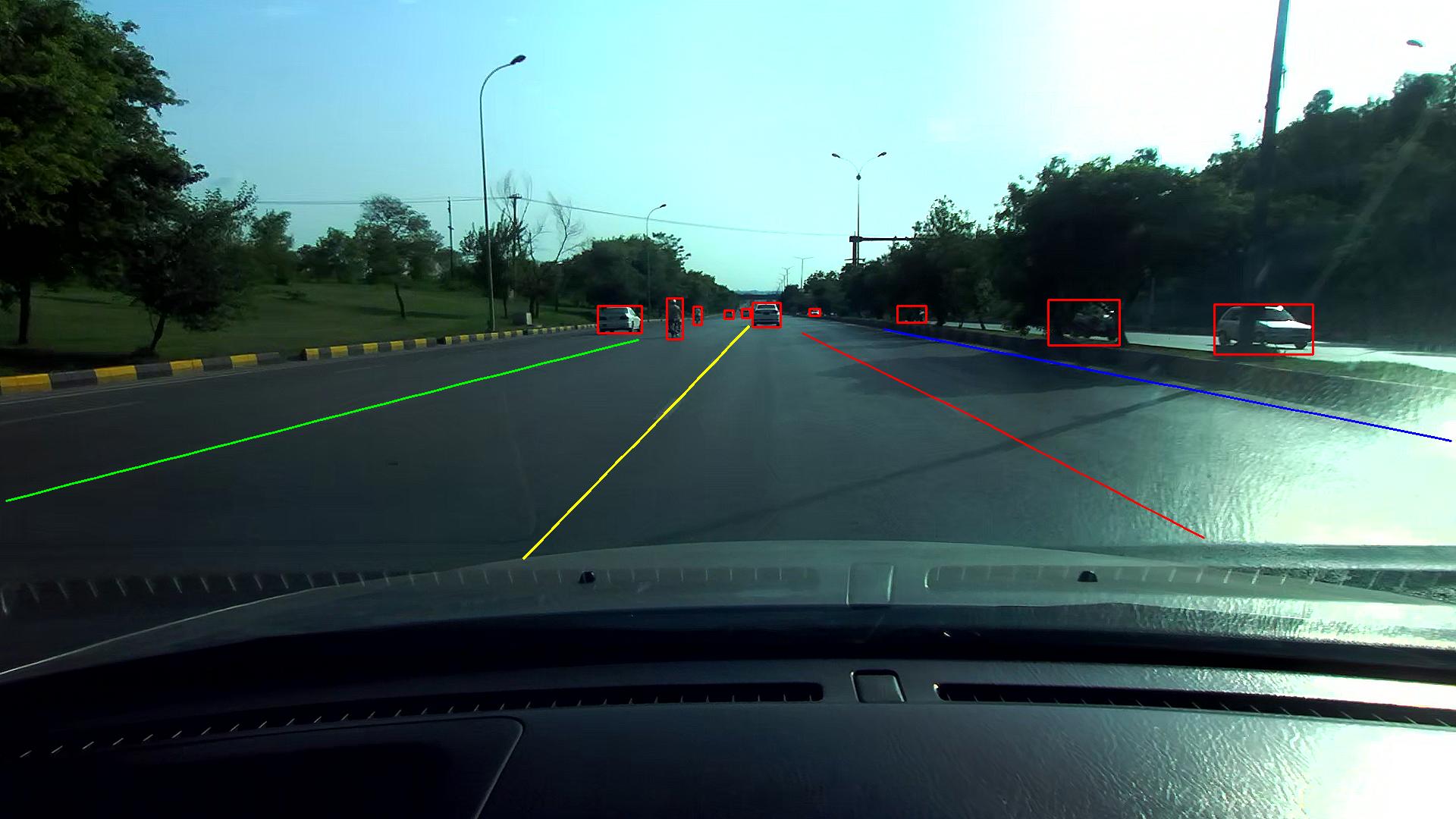

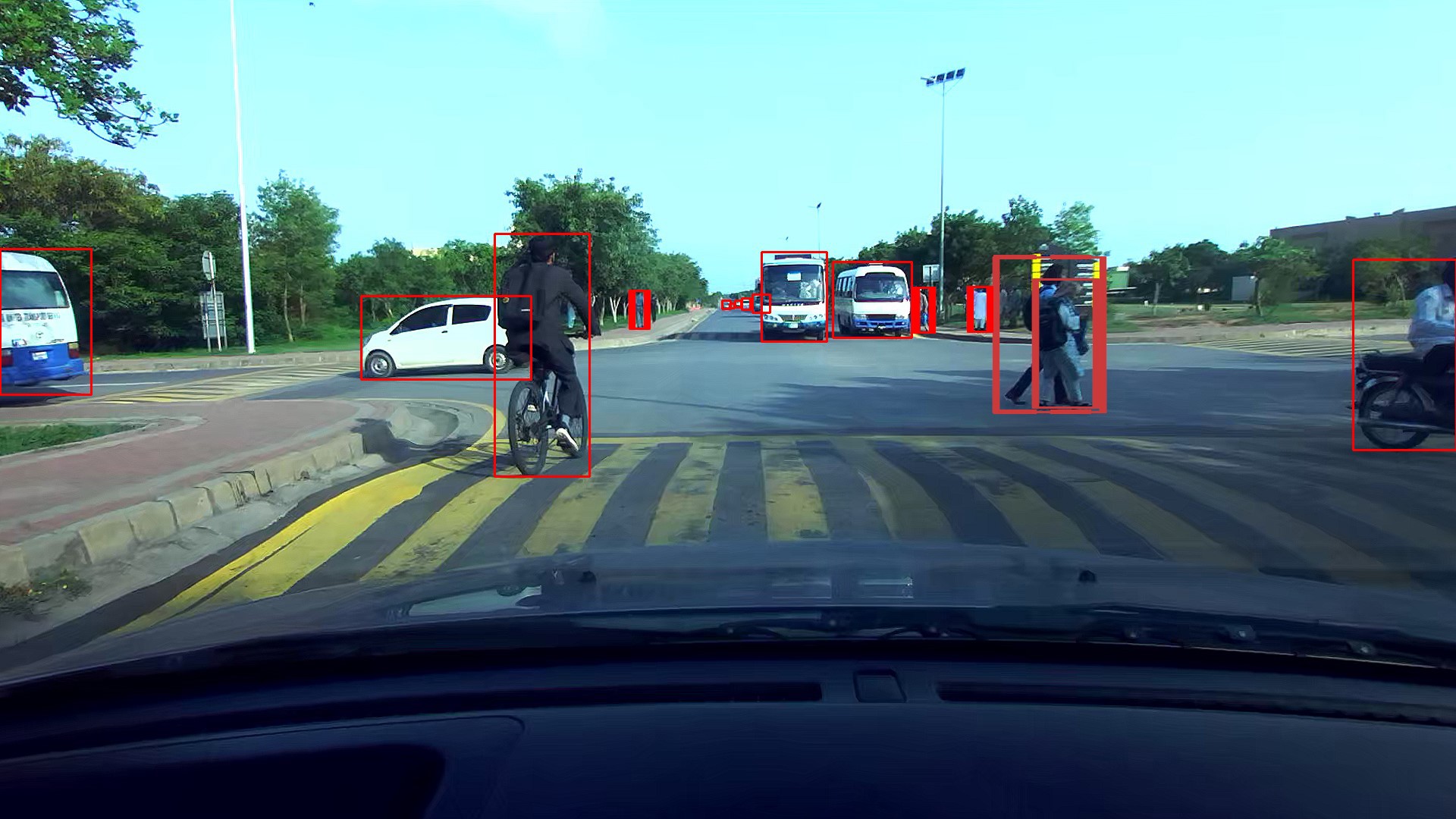

We use a stereo camera mounted at the rear-view mirror to collect 2D images and depth maps of Pakistani roads at a rate of 30 frames per second. We ensure that the data we collect represents a realistic driving experience so that the model can adapt and perform well in all kinds of situations. To do this, we gather a diverse dataset with proportionate numbers of samples from every kind of road, ranging from broad highways to crowded streets and tight alleyways, including roads with faded lane markings or none at all.
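To make the depth-map idea concrete, here is a minimal sketch of how a stereo disparity is commonly converted into metric depth with the pinhole relation Z = f * B / d. The focal length, baseline, and disparity values below are hypothetical and chosen only for illustration; they are not Vision Drive's actual calibration.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Convert a stereo disparity (pixels) to depth (meters) via Z = f * B / d.

    Returns None when the disparity is zero or negative, which means the
    stereo matcher found no valid correspondence for that pixel.
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


# Hypothetical calibration values, for illustration only.
FOCAL_LENGTH_PX = 700.0  # focal length expressed in pixels
BASELINE_M = 0.12        # distance between the two camera centers

# One row of disparities from an imagined disparity map.
row_of_disparities = [42.0, 21.0, 0.0, 8.4]
depths = [
    disparity_to_depth(d, FOCAL_LENGTH_PX, BASELINE_M)
    for d in row_of_disparities
]
print(depths)
```

Larger disparities correspond to closer objects, so depth resolution degrades with distance. This is one reason a stereo setup is best suited to the short and medium ranges that matter most in dense traffic.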

This data is sent in real time to an AWS server, where it is later pre-processed and configured for our use case. In addition to vehicles, pedestrians, and traffic signs, our dataset includes a number of Pakistan-specific classes, such as auto rickshaws, wheelcarts, motorbikes, riders, and more.
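As a rough illustration of how such a class list might be handled during pre-processing, the sketch below maps class names to integer IDs and counts labeled instances per class, which is a quick way to spot under-represented categories. The class names follow the ones mentioned above, but the IDs, the ordering, and the annotation format are assumptions made for this example.

```python
from collections import Counter

# Hypothetical class list: names follow the article, IDs and order are assumed.
CLASS_NAMES = [
    "vehicle",
    "pedestrian",
    "traffic_sign",
    "auto_rickshaw",
    "wheelcart",
    "motorbike",
    "rider",
]
CLASS_TO_ID = {name: idx for idx, name in enumerate(CLASS_NAMES)}


def class_histogram(annotations):
    """Count labeled instances per class, rejecting unknown class names."""
    counts = Counter({name: 0 for name in CLASS_NAMES})
    for ann in annotations:
        label = ann["label"]
        if label not in CLASS_TO_ID:
            raise ValueError(f"unknown class: {label}")
        counts[label] += 1
    return dict(counts)


# Assumed annotation format: one dict per labeled object.
sample_annotations = [
    {"label": "auto_rickshaw"},
    {"label": "motorbike"},
    {"label": "auto_rickshaw"},
    {"label": "pedestrian"},
]
histogram = class_histogram(sample_annotations)
print(histogram)
```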

Currently, we collect data from Islamabad, Rawalpindi, Karachi, and Lahore, and we plan to expand to other cities so that the model can generalize across the country.

- Model Architecture

Object Detection

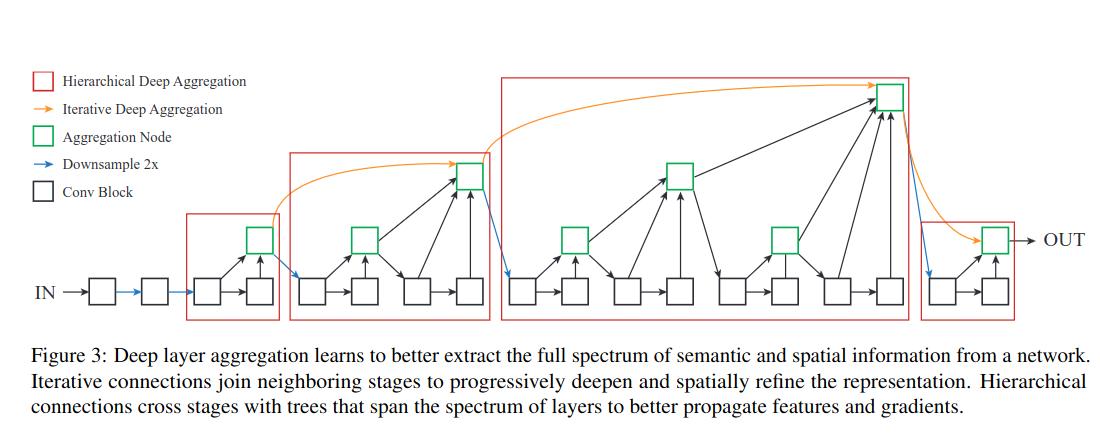

We take a multi-task, single-model approach: one model performs multiple detection tasks, namely object detection and lane marking detection. This is achieved with a strong feature-extraction backbone, the 34-layer Deep Layer Aggregation (DLA) model. The backbone combines hierarchical and iterative layer aggregation with feature pyramid networks (FPNs) to learn features at different scales of the receptive field.

Image credit: https://arxiv.org/pdf/1707.06484.pdf

The backbone is pretrained on the ImageNet 2012 dataset. With a mean IoU of 73.5 on Cityscapes, it outperforms other state-of-the-art models on most classes, which makes it one of the strongest backbones currently available.

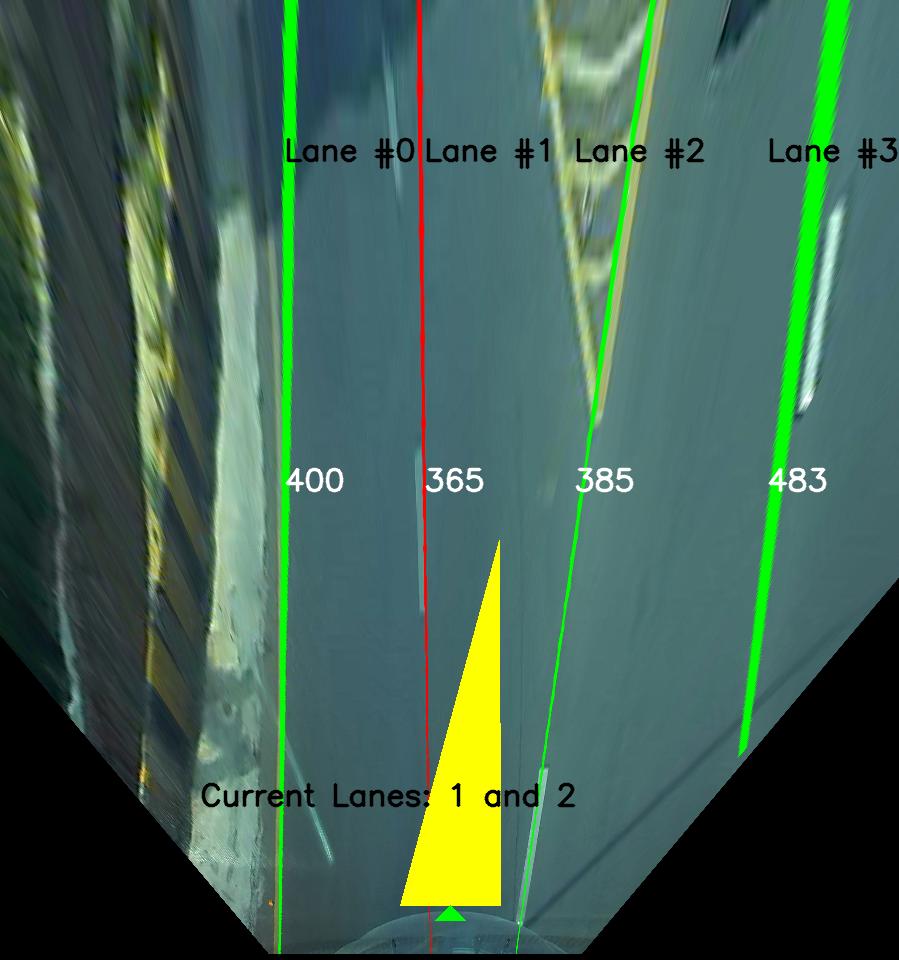

For lane detection, we use a Feature Pyramid Network (FPN) architecture with a custom-designed FPN neck on top of the DLA34 backbone. Post-processing then locates the lane markings in the image and establishes their positions relative to the host vehicle. The DLA34 backbone plays a crucial role in the model's performance: the model achieves an F1 score of 0.847 on the challenging CULane dataset, surpassing contemporary lane detection networks.
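One way to picture "positions relative to the host vehicle" is the lateral offset of the car from the center of its own lane. The sketch below is a simplified illustration, not the actual Vision Drive post-processing. It assumes the camera sits on the vehicle's centerline, that the lane markings are summarized by the x-coordinates where they cross the bottom image row, and that the lane is 3.5 m wide. The function name and all numbers are hypothetical.

```python
def ego_lane_offset(lane_xs_px, image_width_px, lane_width_m=3.5):
    """Estimate the host vehicle's lateral offset from the ego-lane center.

    lane_xs_px: x-coordinates (pixels) where detected lane markings cross
                the bottom row of the image.
    Returns the offset in meters (positive means the vehicle is right of
    the lane center), or None if the ego lane is not bounded on both sides.
    """
    center_px = image_width_px / 2.0  # assumed position of the vehicle
    left = [x for x in lane_xs_px if x < center_px]
    right = [x for x in lane_xs_px if x >= center_px]
    if not left or not right:
        return None
    # The ego lane is bounded by the nearest marking on each side.
    left_px, right_px = max(left), min(right)
    lane_center_px = (left_px + right_px) / 2.0
    # Scale pixels to meters using the assumed physical lane width.
    meters_per_px = lane_width_m / (right_px - left_px)
    return (center_px - lane_center_px) * meters_per_px


# Four detected markings in a 1280-pixel-wide frame (made-up values).
offset_m = ego_lane_offset([100, 400, 1000, 1300], image_width_px=1280)
print(offset_m)
```

A signed offset like this is the kind of quantity that lane departure warnings and lane centering can be built on, for example by alerting when its magnitude stays above a chosen limit.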

- Results

We fine-tune our architecture on our Pakistani roads dataset and achieve a mean average precision (mAP) of 75% for object detection and an F1 score of 0.95 on the lane detection task (at an IoU threshold of 0.5).
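For readers less familiar with these metrics, the sketch below shows how IoU and F1 are typically computed. A prediction counts as a true positive when its IoU with a ground-truth item reaches the threshold (0.5 here), and F1 is the harmonic mean of precision and recall. The example uses axis-aligned boxes for brevity; lane benchmarks such as CULane compute IoU on rasterized lane masks instead. The counts are made up and are not our evaluation results.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from match counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


IOU_THRESHOLD = 0.5

# Two overlapping boxes: is the prediction a match at the threshold?
iou = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
is_match = iou >= IOU_THRESHOLD

# Made-up counts: 8 true positives, 2 false positives, 2 false negatives.
f1 = f1_score(tp=8, fp=2, fn=2)
print(iou, is_match, f1)
```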

- Features

In addition to detecting road lanes and objects, we go a step further and introduce sophisticated ADAS features into the system as well. These include but are not limited to:

- Real-time collision avoidance with autobraking

- Traffic sign recognition (including signs in Urdu)

- Speed limit alerts

- Lane-keeping and centering assist

- Lane departure warnings

- Parking assist with a bird's-eye view of the surroundings
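To give a feel for how a feature like collision avoidance can build on the perception outputs, here is a minimal sketch based on time to collision (TTC), a common heuristic in which the distance to the object ahead is divided by the closing speed. It is not the actual Vision Drive logic. The function names and both thresholds are hypothetical, and the distance is assumed to come from the stereo depth map.

```python
def time_to_collision(distance_m, closing_speed_mps):
    """Seconds until impact at constant speeds; None if the gap is not closing."""
    if closing_speed_mps <= 0:
        return None
    return distance_m / closing_speed_mps


def collision_action(distance_m, closing_speed_mps,
                     warn_ttc_s=2.5, brake_ttc_s=1.2):
    """Map time to collision onto an action: 'none', 'warn', or 'brake'.

    The threshold defaults are illustrative assumptions, not tuned values.
    """
    ttc = time_to_collision(distance_m, closing_speed_mps)
    if ttc is None:
        return "none"
    if ttc <= brake_ttc_s:
        return "brake"
    if ttc <= warn_ttc_s:
        return "warn"
    return "none"


# Made-up scenarios: (distance in meters, closing speed in meters per second).
scenarios = [(30.0, 5.0), (20.0, 10.0), (10.0, 10.0), (10.0, -3.0)]
actions = [collision_action(d, v) for d, v in scenarios]
print(actions)
```

A two-level scheme like this alerts the driver before any automatic intervention, in keeping with the idea that ADAS assists the driver rather than replacing them.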

Conclusion

Summing up, Vision Drive provides an enhanced driving experience in terms of both safety and performance. We have a state-of-the-art deep learning model that is optimized for our environment and produces excellent results on Pakistani roads. Furthermore, we achieve a latency of 55 milliseconds per frame while sustaining a throughput of over 30 frames per second, so the entire ADAS keeps pace with the camera feed in real time. We plan to share our code optimization and hardware acceleration techniques in a future blog post.